Google has announced that it will begin rolling out a new feature to help users “better understand how a particular piece of content was created and modified”.

This comes after the company joined the Coalition for Content Provenance and Authenticity (C2PA), a group of major brands trying to combat the spread of misleading information online, and helped develop the latest Content Credentials standard. Amazon, Adobe and Microsoft are also committee members.

Google says the feature, set to launch over the coming months, will use the current Content Credentials guidelines (that is, an image’s metadata) within its Search parameters to add a label to images that are AI-generated or edited, providing more transparency for users. This metadata includes information such as the origin of the image, as well as when, where and how it was created.

However, the C2PA standard, which gives users the ability to trace the origin of different media types, has been declined by many AI developers, including Black Forest Labs, the company behind the Flux model that Grok, the chatbot on X (formerly Twitter), uses for image generation.

This AI flagging will be implemented via Google’s current About This Image window, which means it will also be available to users through Google Lens and Android’s ‘Circle to Search’ feature. When it goes live, users will be able to click the three dots above an image and select “About this image” to check whether it was AI-generated, so the label is not going to be as evident as we hoped.

Is this enough?

While Google needed to do something about AI images in its Search results, the question remains as to whether a hidden label is enough. If the feature works as stated, users will need to perform extra steps to verify whether an image has been created using AI before Google confirms it. Those who don’t already know about the existence of the About This Image feature may not even realize a new tool is available to them.

Video deepfakes have already caused serious harm, as in the case earlier this year when a finance worker was scammed into paying $25 million to a group posing as his CFO, but AI-generated images are nearly as problematic. Donald Trump recently posted digitally rendered images of Taylor Swift and her fans falsely endorsing his campaign for President, and Swift found herself the victim of image-based sexual abuse when AI-generated nudes of her went viral.

While it’s easy to complain that Google isn’t doing enough, even Meta isn’t too keen to let the cat out of the bag. The social media giant recently updated its policy to make labels less visible, moving the relevant information to a post’s menu.

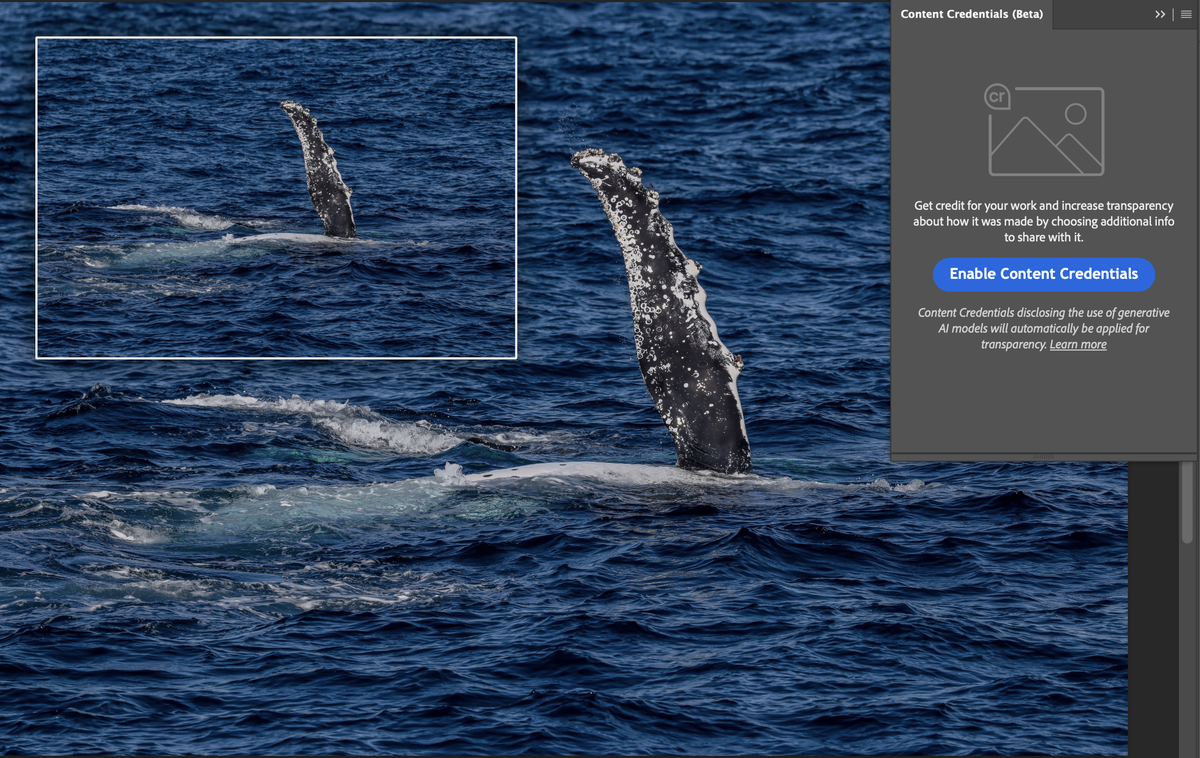

While this upgrade to the ‘About this image’ tool is a positive first step, more aggressive measures will be required to keep users informed and protected. More companies, such as camera makers and developers of AI tools, will also need to accept and use the C2PA’s watermarks to ensure this system is as effective as it can be, because Google will be dependent on that data. Only a few camera models, such as the Leica M11-P and the Nikon Z9, have built-in Content Credentials features, while Adobe has implemented a beta version in both Photoshop and Lightroom. But again, it’s up to the user to use the features and provide accurate information.

In a study by the University of Waterloo, only 61% of people could tell the difference between AI-generated and real images. If these numbers are accurate, more than a third of people cannot spot an AI-generated image on their own, and a label hidden behind a menu won’t offer them any added transparency unless they know to look for it. Still, it’s a positive step from Google in the fight to reduce misinformation online, though it would be good if the tech giants made these labels much more accessible.