- Kioxia reveals a new project called AiSAQ, which aims to replace RAM with SSDs for AI data processing

- Bigger (read: 100TB+) SSDs could improve RAG at a lower cost than using memory alone

- No timeline has been given, but expect Kioxia's rivals to offer similar tech

Large language models often generate plausible but factually incorrect outputs; in other words, they make stuff up. These "hallucinations" can damage reliability in information-critical tasks such as medical diagnosis, legal analysis, financial reporting, and scientific research.

Retrieval-Augmented Generation (RAG) mitigates this issue by integrating external data sources, allowing LLMs to access real-time information during generation. Grounding outputs in current data reduces errors and improves contextual accuracy. Implementing RAG effectively requires substantial memory and storage resources, particularly for large-scale vector data and indices. Traditionally, this data has been stored in DRAM, which, while fast, is both expensive and limited in capacity.
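A back-of-the-envelope calculation shows why vector data strains DRAM. The sketch below is illustrative only: the corpus sizes and embedding widths are hypothetical, and the count covers raw float32 vectors alone, ignoring any graph or index overhead a real deployment would add.

```python
def raw_vector_bytes(num_vectors: int, dims: int, bytes_per_value: int = 4) -> int:
    """Bytes needed to hold uncompressed embedding vectors (float32 by default).

    Ignores index/graph overhead, so real deployments need more than this.
    """
    return num_vectors * dims * bytes_per_value


GB = 10**9  # decimal gigabytes

# Hypothetical corpus sizes and embedding widths, chosen only for illustration.
for n, d in [(10_000_000, 768), (1_000_000_000, 768), (1_000_000_000, 1536)]:
    size_gb = raw_vector_bytes(n, d) / GB
    print(f"{n:>13,} vectors x {d:>4} dims -> {size_gb:>8,.1f} GB")
```

By this count, 10 million 768-dimensional vectors need about 31 GB, while one billion need about 3,072 GB (roughly 3 TB) before any index structures are added, which is more DRAM than most single servers carry.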

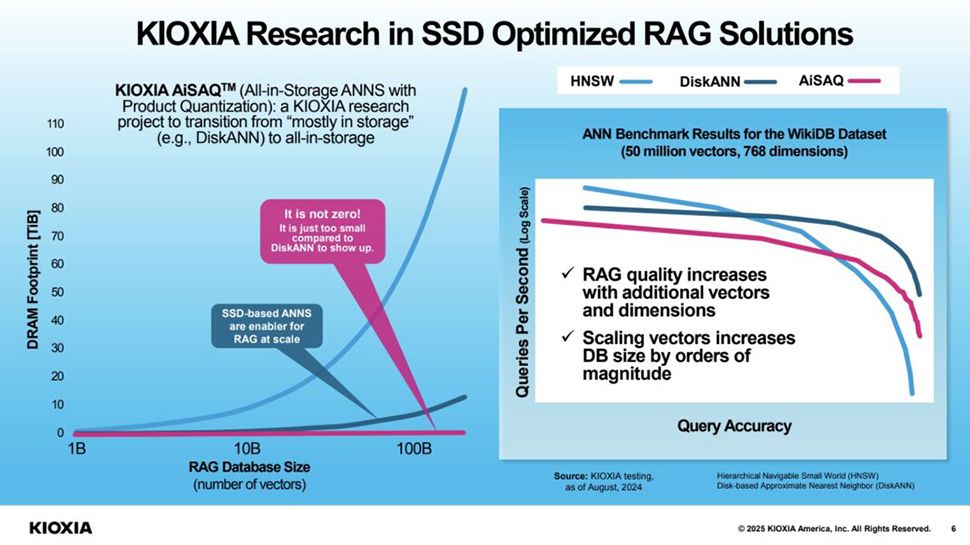

To address these challenges, ServeTheHome reports that at this year's CES, Japanese memory giant Kioxia introduced AiSAQ (All-in-Storage ANNS with Product Quantization, where ANNS stands for Approximate Nearest Neighbor Search), which uses high-capacity SSDs to store vector data and indices. Kioxia claims AiSAQ significantly reduces DRAM usage compared to DiskANN, offering a more cost-effective and scalable approach to supporting large AI models.
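Product quantization, the "PQ" in AiSAQ, is a well-known vector compression technique: each vector is split into m sub-vectors, and each sub-vector is replaced by the index of its nearest centroid in a small per-subspace codebook. Searches then compare the exact query against these compact codes via per-subspace lookup tables (asymmetric distance computation). The sketch below is a generic, toy-sized illustration in pure Python, not Kioxia's implementation; every function name, size and the random data are hypothetical, and it does not model how AiSAQ lays data out on SSD. For context, DiskANN keeps PQ-compressed vectors in DRAM while placing full vectors and its graph on SSD; the "All-in-Storage" name suggests AiSAQ moves that compressed data onto SSD as well.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def split(v, m):
    """Cut a vector into m equal-width sub-vectors (assumes len(v) % m == 0)."""
    step = len(v) // m
    return [v[i * step:(i + 1) * step] for i in range(m)]


def kmeans(points, k, iters=10, rng=None):
    """Tiny Lloyd's k-means; returns k centroids as lists of floats."""
    rng = rng or random.Random(0)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iters):
        buckets = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: sq_dist(p, centroids[c]))
            buckets[nearest].append(p)
        for j, members in enumerate(buckets):
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return centroids


def train_pq(vectors, m, k, rng=None):
    """Learn one codebook of k centroids for each of the m subspaces."""
    subs = [split(v, m) for v in vectors]
    return [kmeans([s[i] for s in subs], k, rng=rng) for i in range(m)]


def encode(v, codebooks):
    """Replace each sub-vector with the index of its nearest centroid."""
    return [min(range(len(cb)), key=lambda c: sq_dist(sub, cb[c]))
            for sub, cb in zip(split(v, len(codebooks)), codebooks)]


def adc_distances(query, codes, codebooks):
    """Asymmetric distance: exact query sub-vectors vs. quantized database."""
    # One lookup table per subspace: distance from the query piece to every centroid.
    tables = [[sq_dist(sub, c) for c in cb]
              for sub, cb in zip(split(query, len(codebooks)), codebooks)]
    return [sum(t[c] for t, c in zip(tables, code)) for code in codes]


def search(query, codes, codebooks, top=3):
    """Return indices of the `top` database vectors with the smallest ADC distance."""
    dists = adc_distances(query, codes, codebooks)
    return sorted(range(len(codes)), key=lambda i: dists[i])[:top]


# Toy usage with hypothetical sizes: 200 random 8-dim vectors, m=4 subspaces, k=4 centroids.
rng = random.Random(42)
dims, m, k = 8, 4, 4
data = [[rng.gauss(0, 1) for _ in range(dims)] for _ in range(200)]
codebooks = train_pq(data, m, k, rng=rng)
codes = [encode(v, codebooks) for v in data]  # each vector is now m small integers
print(search(data[7], codes, codebooks))
```

With k = 256 centroids per subspace, each code fits in one byte, so a vector shrinks from dims × 4 bytes of float32 to m bytes. Because a vector's own code picks the nearest centroid in every subspace, querying with a stored vector always gives its own code the minimum ADC distance, though identically coded vectors can tie with it.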

More accessible and cost-effective

Moving to SSD-based storage makes it possible to handle larger datasets without the high costs of extensive DRAM use.

While accessing data from SSDs may add latency compared to DRAM, the trade-off brings lower system costs and improved scalability, which could support better model performance and accuracy, since larger datasets provide a richer foundation for learning and inference.

By using high-capacity SSDs, AiSAQ addresses the storage demands of RAG while contributing to the broader goal of making advanced AI technologies more accessible and cost-effective. Kioxia hasn't revealed when it plans to bring AiSAQ to market, but it's a safe bet that rivals like Micron and SK Hynix will have something similar in the works.

ServeTheHome concludes: "Everything is AI these days, and Kioxia is pushing this as well. Realistically, RAG is going to be an important part of many applications, and if there is an application that needs to access lots of data, but it is not used as frequently, this would be a great opportunity for something like Kioxia AiSAQ."